Cloud and data centre virtualisation has brought unprecedented performance, efficiency and agility. With compute virtualisation and increased server workload density, server utilisation has improved dramatically in recent years.

The demand for data-intensive applications such as distributed storage and machine learning, combined with the increase in server utilisation, makes network interconnect performance the deciding factor for overall data centre performance. Software-defined overlay technologies are now bringing further agility and efficiency to the network. Using the correct interconnect technology will unleash the full performance potential of the virtualised data centre infrastructure.

Software-based open virtual switches (such as OVS) that run as part of the server hypervisor have been a pivotal part of overlay-based solutions since the early days. The Mellanox ConnectX-5 network adapter provides hardware-accelerated virtual switching capability with its ASAP2 technology.

ASAP2 works seamlessly with software controllers and provides switching capabilities while freeing up precious server cycles. With ConnectX-5 ASAP2, customers can build networks with software-defined network virtualisation, without compromising on infrastructure performance.

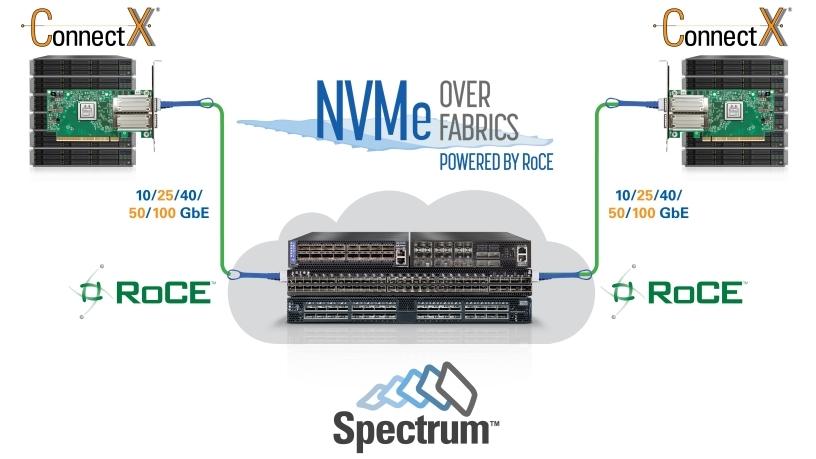

Storage architecture is also evolving to meet the latest cloud application performance demands. Scale-out storage systems are gaining popularity. Fast solid state drives (SSDs) are replacing legacy spinning drives. As workloads move to the cloud, fewer storage systems are using legacy protocols such as Fibre Channel. Newer and more efficient protocols such as NVMe and NVMe over Fabrics are being adopted to extract even more storage performance from the infrastructure.

The performance of scale-out systems is influenced by the locality of the data. Direct memory access technologies such as Remote Direct Memory Access (RDMA) and GPUDirect were developed to speed up access to remote data. With these technologies, the endpoint hardware has direct access to both remote and local memory. Hardware-accelerated memory accesses operate at much higher speeds than traditional software-based CPU accesses. Faster endpoints need faster networks. 10/40GbE networks are no longer enough. We need 25/100GbE interconnects to deliver performance and future-proof the data centre infrastructure.
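As a rough illustration of why link speed matters once endpoints are no longer the bottleneck, the sketch below computes the idealised time to move a dataset across links of different speeds. The 1 TB dataset size is a hypothetical figure chosen for the example, and the calculation assumes the link runs at full line rate with no protocol overhead, so real transfers would take longer.

```python
def transfer_seconds(size_bytes: float, link_gbps: float) -> float:
    """Idealised time to move size_bytes over a link of link_gbps.

    Assumes 1 Gb/s = 10^9 bits per second, full line rate and
    zero protocol overhead (a simplification for illustration).
    """
    return size_bytes * 8 / (link_gbps * 1e9)


# Hypothetical dataset size used only for this example: 1 TB (10^12 bytes).
DATASET_BYTES = 1e12

for rate in (10, 25, 40, 100):
    seconds = transfer_seconds(DATASET_BYTES, rate)
    print(f"{rate:>3} GbE: {seconds:7.1f} s")
```

Under these assumptions, the same transfer that occupies a 10GbE link for 800 seconds takes 80 seconds on a 100GbE link, which is the kind of gap that becomes visible once storage and memory access are hardware accelerated.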

Modern data centre application performance demands can be met using a scale-out distributed architecture. Interconnect technology is a pivotal element of high-performance distributed systems. Mellanox is a leading provider of high-performance, end-to-end interconnect solutions, distributed in Africa by value-added distributor Networks Unlimited, and by Pinnacle. In January 2018, Mellanox's ConnectX-5 Ethernet adapter received the Linley Group 2017 Analysts' Choice Award for Best Networking Chip.
